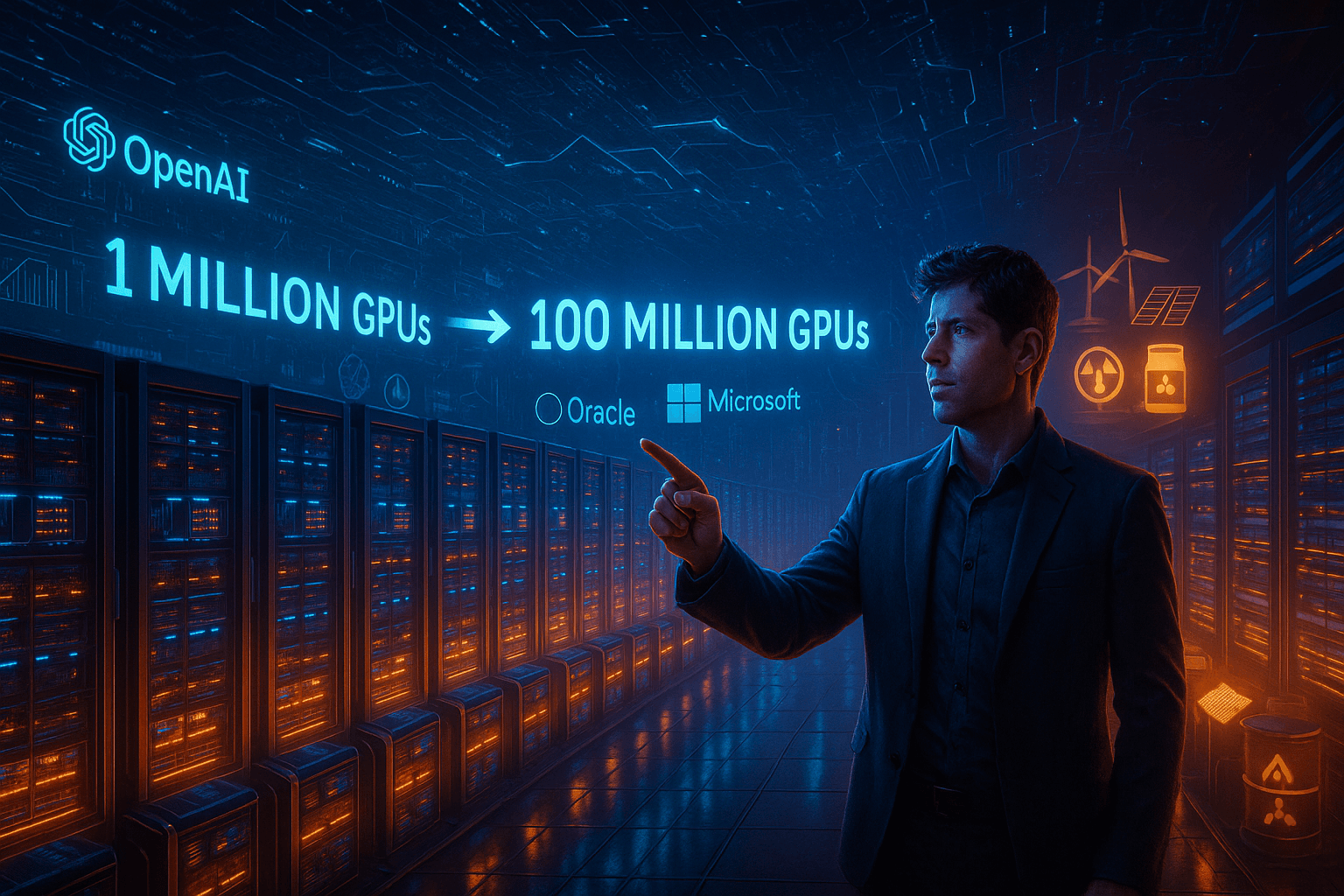

OpenAI’s push to 1 million GPUs is making headlines. Sam Altman, the CEO of OpenAI, announced that his team will bring over 1 million GPUs online by the end of 2025. The milestone reflects how aggressively OpenAI is scaling its AI compute.

Altman isn’t stopping there, however. His challenge to multiply that number by 100, to 100 million GPUs, has ignited global curiosity and debate. Made on July 20, 2025, in a post on X, the remark underscores OpenAI’s commitment to pushing the boundaries of AI infrastructure.

Sam Altman’s Ambitious 100x Goal

Sam Altman’s challenge to 100x OpenAI’s GPU count is both a flex and a call to action. In his X post, he expressed pride in his team’s achievement while humorously urging them to “get to work figuring out how to 100x that lol.” The target of 100 million GPUs is less about an immediate rollout and more about setting a long-term vision for artificial general intelligence (AGI). OpenAI’s recent history, including the GPU shortages that forced a staggered rollout of GPT-4.5 in February 2025, suggests this isn’t mere bravado but a strategic response to compute bottlenecks.

The 100x goal implies a shift from scaling alone to innovation, potentially involving custom chips, energy solutions, and global partnerships. While the “lol” adds a lighthearted tone, the underlying message is clear: OpenAI aims to lead the AI revolution by rethinking compute infrastructure. This vision aligns with Altman’s earlier $7 trillion pitch to overhaul the global semiconductor industry, which shows the scale of ambition behind the 1 million GPU milestone.

The Current State of OpenAI’s GPU Infrastructure

As of mid-2025, OpenAI is on track to deploy over 1 million GPUs by year-end, a feat that would make it the largest consumer of AI compute globally. That is five times the 200,000 Nvidia H100 GPUs powering xAI’s Grok 4. The supporting infrastructure includes the world’s largest AI data center in Texas, bolstered by partnerships with Microsoft Azure and Oracle and by a possible move into Google TPUs.

This rapid expansion follows a GPU shortage that forced OpenAI to stagger GPT-4.5’s rollout earlier this year. Altman’s team has since prioritized scaling, adding tens of thousands of GPUs weekly. The current setup leverages Nvidia’s high-end hardware, but diversification into custom silicon and alternative accelerators hints at a robust, multi-faceted strategy. This milestone is a testament to OpenAI’s aggressive growth, setting the stage for the 100x challenge.

Challenges of Scaling to 100 Million GPUs

Achieving 100 million GPUs is a Herculean task. At current Nvidia prices of roughly $30,000 per GPU, the hardware alone would cost about $3 trillion, close to the GDP of the UK. Nvidia also could not manufacture this volume in the near term, so the goal would require breakthroughs in chip production capacity.
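The $3 trillion figure is simple multiplication, and a short Python sketch makes the assumption behind it explicit. The function name `hardware_cost_usd` is hypothetical. The inputs are the article's own numbers, and the estimate covers GPU purchases only, not data centers, networking, or power.

```python
# Back-of-the-envelope GPU purchase cost, using the article's figures.
GPU_COUNT = 100_000_000       # the 100x target
PRICE_PER_GPU_USD = 30_000    # the article's assumed price per Nvidia GPU


def hardware_cost_usd(gpu_count: int, price_per_gpu_usd: int) -> int:
    """Return the total GPU purchase cost in US dollars."""
    return gpu_count * price_per_gpu_usd


total = hardware_cost_usd(GPU_COUNT, PRICE_PER_GPU_USD)
print(f"${total / 1e12:.1f} trillion")  # prints "$3.0 trillion"
```

Because the total scales linearly with the unit price, cheaper custom silicon would cut the bill in direct proportion.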

Energy demands pose another hurdle. A single high-end GPU can consume as much power as a small household, so 100 million GPUs could strain global power grids. Cooling and data center space requirements further complicate the equation. To make the 1 million GPU milestone a stepping stone to this goal, Altman’s team must innovate in energy efficiency, possibly exploring nuclear power or advanced cooling systems. Skeptics argue the target is purely aspirational, but it pushes the industry toward sustainable solutions.
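To get a feel for the scale of the power problem, here is a rough Python sketch. None of its inputs come from the article; they are assumptions. It takes about 700 W per GPU, roughly the rated maximum of an Nvidia H100 SXM, and a hypothetical data center overhead factor (PUE) of 1.3 for cooling and power delivery.

```python
# Rough facility power estimate for a GPU fleet. All inputs are assumptions.
WATTS_PER_GPU = 700   # assumed: roughly the rated max of an Nvidia H100 SXM
PUE = 1.3             # assumed overhead for cooling and power delivery


def fleet_power_gw(gpu_count: int,
                   watts_per_gpu: float = WATTS_PER_GPU,
                   pue: float = PUE) -> float:
    """Return the estimated facility power draw in gigawatts."""
    return gpu_count * watts_per_gpu * pue / 1e9


for count in (1_000_000, 100_000_000):
    print(f"{count:>11,} GPUs: about {fleet_power_gw(count):.1f} GW")
```

Under these assumptions, 1 million GPUs draw just under 1 GW and 100 million draw on the order of 90 GW. Changing the per-GPU wattage or the overhead factor shifts the result proportionally.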

Technological Innovations Driving the Vision

To realize the 100x vision, OpenAI is likely investing in cutting-edge technologies. Custom silicon, hinted at by Altman, could optimize performance and reduce reliance on Nvidia. Partnerships with Oracle for data centers and potential use of Google TPUs suggest a diversified compute stack. High-bandwidth memory (HBM) and new chip architectures are also in play and could improve the efficiency of the fleet.

AI-driven optimization of training processes could further stretch existing resources, while advancements in energy storage, perhaps inspired by xAI’s Tesla battery-backed Colossus, might address power needs. These innovations are critical to scaling from 1 million to 100 million GPUs, positioning OpenAI as a pioneer in AI hardware.

Industry Impact and Competition

OpenAI’s 1 million GPU milestone and 100x goal are reshaping the AI landscape. Competitors like xAI (targeting 50 million GPUs by 2030), Meta (building 300,000+ GPU clusters), and Google (leveraging millions of TPUs) are accelerating their own expansions. This compute arms race is driving demand for GPUs, benefiting Nvidia and prompting others to develop in-house chips.

The ripple effect includes rising hardware costs and energy debates, with OpenAI’s scale amplifying sustainability concerns. Altman’s vision could force industry standards to evolve, fostering collaboration on energy and manufacturing. However, it also risks overextension, as seen with OpenAI’s delayed Stargate project, highlighting the tightrope between ambition and execution.

Future Implications for AI Development

If OpenAI succeeds in scaling to 100 million GPUs, the implications for AI are profound. Enhanced compute power could accelerate AGI research, enabling models to rival human cognition by the 2030s, as Altman predicts. This could revolutionize fields like healthcare, education, and autonomous systems, but it raises ethical questions about control and job displacement.

The energy and cost challenges might spur global AI regulations or public-private partnerships. For users, faster, more capable AI tools like ChatGPT could emerge, though subscription costs might rise. The 1 million GPU milestone is just the beginning, with the 100x vision potentially redefining technological progress.

Conclusion

OpenAI’s 1 million GPUs mark a pivotal moment in AI history, and Sam Altman’s 100x vision pushes the envelope further. While the 1 million GPU target is within reach by December 2025, the leap to 100 million demands innovation in finance, energy, and technology. The plan showcases OpenAI’s leadership and challenges the industry to rethink AI infrastructure.

As the race intensifies, the global tech community watches closely. Will Altman’s dream become reality, or will it expose the limits of current capabilities?

Share your thoughts below—do you see this as a game-changer or a stretch too far?